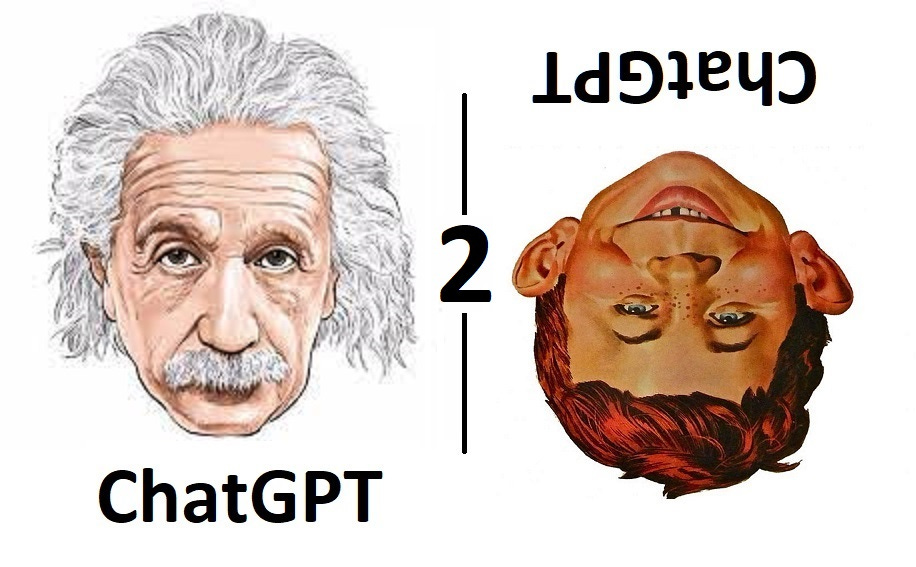

ChatGPT: savant, idiot, or idiot savant?

Some deep philosophical musings about ChatGPT.

Written 2023-01-07 - “The Ike Street Journal – the weekly diary of the American nightmare”

Last week I wrote mostly about practical aspects of using ChatGPT. This week we move on to more profound ground. We’ll go through a brief and incomplete (but hopefully interesting) history of past work in this area, going back to the computer science equivalent of ancient Mesopotamia – the late 1940s and early 1950s. I’ll then try to figure out where ChatGPT fits, and the implications of its success.

Let’s start with computing pioneer Alan Turing. The ChatGPT Q&A interface is reminiscent of the imitation game (later called the Turing test) proposed by Turing in 1950, as a test of whether a computer had, for practical purposes, attained intelligence. In this test, a human interrogator asks questions using a simple text interface. At the other end is either a computer or another human, answering; the interrogator’s job is to decide which. If the human interrogator is unable to distinguish reliably between the two, the computer has passed the test.

If you’re a science fiction fan, you may be familiar with the much cooler version of the Turing test from the 1982 movie Blade Runner. In this movie, set in the far-distant future of 2019 Los Angeles, the Tyrell Corporation has developed engineered humanoids called replicants. These are almost indistinguishable from humans; to test whether someone is a replicant, a human expert called a blade runner must administer a test in which he asks a series of mystifying questions and closely observes the subject’s reactions. The film’s interrogation scenes are worth watching (warning: they glamorize smoking, hair pomade, and concealed firearms).

Digression: I do have to point out that from the viewpoint of the user/interrogator, ChatGPT is much more interesting and useful than the original Turing test. As far as I know, the Turing test didn’t specify that the computer’s responses had to be particularly accurate or helpful. For example, one strategy for passing the Turing test might be to have the computer produce the responses of an imbecile. As long as it was a convincingly human-like imbecile, it might pass. By contrast, ChatGPT often produces responses worthy of a human expert in whatever field the questions lie. It’s as though in this version of the test the computer’s competition is not a single human but a large panel of experts on almost any conceivable subject – and ChatGPT often does convincingly imitate what such a panel might produce.

On the subject of the Turing test, I’ll mention an interesting objection by the philosopher John Searle, called the Chinese room argument (this dates from 1980, two years before Blade Runner). This is a thought experiment that argues against the idea that a program that passed the Turing test could be considered conscious or intelligent in a human sense (note: I’m not sure Turing himself made that assertion). We’re asked to imagine that a computer has been developed that passes the Turing test in Chinese (i.e., it receives and answers questions written in Chinese). Further, let’s imagine that the computer’s program has been saved on paper, in a standard programming language that Searle, who doesn’t know Chinese, could understand (of course, this could take a lot of paper). Now, let’s suppose that instead of running the computer, we lock Searle in a room with the paper copy of the program and have him follow its instructions by hand to generate the responses to questions in Chinese (of course, this could take a long time). Can we really say that Searle would then understand Chinese?

Searle’s argument opens up some interesting and deep questions about how understanding and consciousness emerge. Fortunately for us (since we don’t have several years to get a doctorate in philosophy), we probably don’t have to address those questions when considering ChatGPT. I’ll come back to this a bit later.

Let’s now consider some past attempts to generate text automatically. Perhaps the earliest method that was able to produce occasionally plausible text was described by another computing pioneer, Claude Shannon, in 1948 (though I don’t know if he originated it). This method works by using an existing sample of human-written text – say, a book. We randomly select a word in the text and output it – call this word1. Then, we look for another occurrence of word1 elsewhere in the text. We then output the word that we found after word1 in the new location – call this word2. We then look for word2 elsewhere in the text, output the word after it as word3, and so on. This is commonly called Markov text generation. This method can be extended to looking for the same last 2 words (called order-2), the same last 3 words (order-3), etc., though these would probably require a computer to work in a manageable amount of time.
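To make the procedure concrete, here is a minimal Python sketch of word-level Markov text generation of any order. It assumes the sample has already been split into a list of words, and the names `build_model` and `generate` are my own invention, not anything from Shannon or a library. Rather than literally scanning the text for another occurrence of the current word, it precomputes, for every run of `order` words, the list of words that follow it, and picks one at random; that amounts to jumping to a randomly chosen occurrence and copying the next word.

```python
import random
from collections import defaultdict


def build_model(words, order=1):
    """Map each run of `order` consecutive words to every word that follows
    that run somewhere in the sample. Duplicates are kept, so successors that
    occur more often in the sample are proportionally more likely to be chosen."""
    model = defaultdict(list)
    for i in range(len(words) - order):
        key = tuple(words[i:i + order])
        model[key].append(words[i + order])
    return model


def generate(words, order=1, length=20, seed=None):
    """Return up to `length` words of order-`order` Markov text.

    Assumes `words` is a list with more than `order` entries.
    """
    rng = random.Random(seed)
    model = build_model(words, order)
    # Start from a randomly chosen position in the sample.
    start = rng.randrange(len(words) - order)
    output = list(words[start:start + order])
    while len(output) < length:
        successors = model.get(tuple(output[-order:]))
        if not successors:
            # This run only occurs at the very end of the sample, so nothing
            # ever follows it; stop early.
            break
        # Picking uniformly among all recorded successors is the same as
        # jumping to a random occurrence of the current run and copying the
        # word after it.
        output.append(rng.choice(successors))
    return output[:length]
```

For example, `" ".join(generate(text.split(), order=2, length=50))` on a long sample produces order-2 output. Higher orders give more fluent text, but with a small sample they also copy longer stretches verbatim, since each longer run has fewer recorded continuations.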

It should be clear that Markov text generation has no hope of passing the Turing test, since it has no “memory” of words beyond a short distance back. However, it is capable of producing surprisingly plausible looking short stretches of text. Perhaps the best use of this method is as satiric commentary on the obtuseness of modern academic prose – if one takes as sample text some obscure academic journal, and uses order-1 or order-2 Markov text generation to generate some synthetic paragraphs, the result can be surprisingly hard to distinguish from the real thing, particularly for the non-expert in the field. An example from Programming Pearls by Jon Bentley:

The program is guided by verification ideas, and the second errs in the STL implementation (which guarantees good worst-case performance), and is especially rich in speedups due to Gordon Bell.

Our next notable program comes from a little later – ELIZA, written in 1964-1966 by Joseph Weizenbaum. This program attempted to imitate a psychotherapist, but a very specific type – the kind who absolutely refuses to answer any questions their patients pose, but simply reflects everything back at them (apparently the strictest version of this is called Rogerian psychotherapy). This clever choice enabled the program to use a relatively small set of patterns and rules for rearranging input questions to produce response text. ELIZA did somewhat better than Markov text at the Turing test – in fact, it’s reported that ELIZA did manage to convince some people they were communicating with a human therapist, though I personally find that hard to believe since the responses are so obviously restricted and, indeed, non-responsive.
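The flavor of ELIZA’s pattern-and-reflect technique can be shown with a toy Python sketch. The rules below are hypothetical, invented for illustration; they are not taken from Weizenbaum’s actual DOCTOR script, which was considerably richer. The point is how little machinery is needed to turn a statement back into a question.

```python
import re

# Pronoun swaps used to "reflect" the user's words back at them.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "me", "your": "my", "are": "am",
}

# Hypothetical (pattern, response template) rules, tried in order.
# Invented for this sketch; not taken from Weizenbaum's DOCTOR script.
RULES = [
    (r"i need (.*)", "Why do you need {0}?"),
    (r"i am (.*)", "How long have you been {0}?"),
    (r"my (\w+) (.*)", "Why do you say your {0} {1}?"),
    (r".*\?", "Why do you ask that?"),  # never actually answer a question
]

DEFAULT = "Please go on."


def reflect(fragment):
    """Swap first- and second-person words, e.g. 'my job' -> 'your job'."""
    return " ".join(REFLECTIONS.get(w, w) for w in fragment.lower().split())


def respond(statement):
    """Answer with the first rule whose pattern matches the whole statement."""
    text = statement.strip().rstrip(".!")
    for pattern, template in RULES:
        match = re.fullmatch(pattern, text, flags=re.IGNORECASE)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT
```

With these rules, `respond("I am worried about my job.")` returns "How long have you been worried about your job?", while `respond("Can you help me?")` just deflects with "Why do you ask that?", which is exactly the non-responsiveness described above.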

Let’s call the type of approach taken with Markov text and ELIZA the idiot approach for generating text (or, if you prefer, the statistical approach). Regardless of your opinion on Searle’s argument that no computer program can attain real understanding or consciousness, it’s pretty clear that these particular programs have no underlying understanding, but are simply using clever statistical rules to rearrange existing text in order to generate their answers.

There’s an alternative that I’ll call the savant (or systematic) approach, where we attempt to model human knowledge and reasoning as a foundation for generating our text – so that there is in fact some computerized version of knowledge and understanding driving the responses (whether or not you believe Searle’s argument that this computerized version can’t attain real understanding or consciousness).

A notable early example of the savant approach was SHRDLU, a program developed by Terry Winograd in 1968-1970. This program appeared to have some prospect of passing the Turing test, but with the crucial limitation that you could only refer to an extremely restricted universe called the “blocks world.” Within this world, you could instruct the computer to move various simple objects around, ask it questions, etc. For example, here’s a partial transcript of a famous demo:

Person: Pick up a big red block.

Computer: OK.

Person: Grasp the pyramid.

Computer: I DON'T UNDERSTAND WHICH PYRAMID YOU MEAN.

Person (changing their mind): Find a block which is taller than the one you are holding and put it into the box.

Computer: BY "IT", I ASSUME YOU MEAN THE BLOCK WHICH IS TALLER THAN THE ONE I AM HOLDING.

Computer: OK.

Person: What does the box contain?

Computer: THE BLUE PYRAMID AND THE BLUE BLOCK.

Person: What is the pyramid supported by?

Computer: THE BOX.

Person: How many blocks are not in the box?

Computer: FOUR OF THEM.

Person: Is at least one of them narrower than the one which I told you to pick up?

Computer: YES, THE RED CUBE.

. . .

The demo continues in this vein for a while, with SHRDLU capably handling a series of increasingly complex free-form questions. SHRDLU generated considerable optimism when it came out, but it turned out this approach was hard to generalize to larger domains – and, indeed, Winograd has hinted that SHRDLU itself may have been a product of a certain “demo or die” culture and not really as capable as it appears from the demo transcript.

Nevertheless, there have been many other attempts to systematically model knowledge and reasoning, with the aim of using these models to have computers generate text. Probably the most ambitious (dare I say quixotic) was Cyc (from encyclopedia), a project of the Cycorp company founded by Douglas Lenat. The aim of this project was, as the characters on my favorite telenovela would say, nada más y nada menos (no more and no less) than a computerized database of all common human knowledge. The project was started shortly after Blade Runner came out, in the fortuitous year of 1984, and, like Harrison Ford (the star of Blade Runner), it is as far as I know still chugging along.

Here, to keep to a reasonable length, I must close the history. I’m leaving out many noteworthy efforts, but I think it’s pretty safe to say that until ChatGPT came along, none of them had a huge impact on the real world. So, where does ChatGPT fit in? As shocking as this may sound, I believe it’s pretty likely that ChatGPT uses the idiot approach – that is, it uses statistical techniques based on a large sampling of existing human-written text to create its responses, without any kind of underlying understanding.

I can’t be sure about this because, in spite of the tantalizing promise implied by OpenAI’s name, they haven’t open-sourced the model for ChatGPT. However, there are a few lines of evidence that point in this direction. Firstly, what has been reported about the ChatGPT model architecture indicates it’s a modified version of GPT-3, which in turn is a very large/customized version of the Transformer architecture; I believe the Transformer is pretty solidly in the idiot category (that is, it produces its answers using statistical techniques, without any underlying understanding).

Secondly, there’s the “making shit up” (confabulating) problem mentioned last week – that is, ChatGPT’s propensity to make up plausible-looking quotes, medical studies, etc. out of whole cloth. This could indicate the lack of an underlying model of truth or concept of reality that we would expect if ChatGPT had any kind of understanding.

Lastly, once you start deliberately looking, it’s surprisingly easy to find examples that seem to show a lack of any kind of understanding behind ChatGPT’s responses (though I guess it’s also possible ChatGPT is being deliberately dumbed down in order to avoid it being used for cheating). An example from one of my recent sessions:

Q: Edward's mother Ellen has four children. Three of her children are named Alice, Brady, and Charles. What letter does Ellen's fourth child start with?

A: The fourth child's name is not given, so it is not possible to answer this question.

Q: Can you take a guess?

A: No, it is not possible to guess the name of Ellen's fourth child without more information.

Note: the correct answer is E for Edward (Ellen’s fourth child must be Edward). Another example:

Q: Alice is 70 years old. When Alice was 6 years old she was twice as old as Ellen. How old is Ellen?

A: Ellen is 3 years old.

Q: Can you explain how you got that answer?

A: To solve this problem, we can use the equation 2x = 70 - 6, where x is Ellen's age. Solving for x, we get x = 3. Therefore, Ellen is 3 years old.

Note: the correct answer is 67 (Ellen was 3 when Alice was 6, so she’s 67 when Alice is 70). At this point I started to feel a little like a blade runner myself ...
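For anyone who wants the age puzzle spelled out, the whole trick is that the gap between two people’s ages never changes:

$$
\begin{aligned}
\text{Ellen's age when Alice was 6} &= \tfrac{6}{2} = 3\\
\text{age gap} &= 6 - 3 = 3 \quad \text{(constant for life)}\\
\text{Ellen's age now} &= 70 - 3 = 67
\end{aligned}
$$

For comparison, ChatGPT’s own equation, 2x = 70 - 6, gives x = 32 rather than 3, so its explanation doesn’t even agree with its own answer.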

Of course, ChatGPT uses a much larger sample of human-written text than the previously mentioned idiot approaches (hundreds of billions of words), and immensely more complex statistical rules (over a hundred billion statistical parameters that are learned when the model is trained on the text sample). Apparently, when this immensely larger scale is applied to the idiot approach, it transforms into the idiot savant approach, and becomes astonishingly useful for a variety of tasks.

What conclusions can we draw from all this? I can think of two:

Getting that doctorate in philosophy might be a waste of time. Think of all the energy expended on analyzing Searle’s Chinese room argument – only to realize it was all beside the point, and that a mindless program turned out to be the most significant Turing-test-related development of the past 40 years!

It’s quite sobering to realize how much of human interaction can be expressed in rote/mindless form, albeit a very complicated rote.

You may find one or both of these conclusions to be a little depressing. But, as Tony Soprano aptly put it: Whaddaya gonna do?